Where is SharePoint Customization going in 2017

I'm actually pretty terrible at betting on the future of technology. But I like writing these posts because they make me sit down and think.

User Experience: SPFx is king

In 2016, the user experience story was dominated by the news of the SharePoint Framework (SPFx), which shifts development and the toolchain to a more modern JavaScript stack: NodeJS, Yeoman and Webpack. There are component libraries like Office UI Fabric, but we also explored options such as Kendo UI and UI Bootstrap, and those have many benefits too. In 2017, SPFx should come to On-Premises as well; we'll have to wait and see what that looks like.

Sure, there are still plenty of gaps that SPFx can't cover. Many of us are looking for a solution that allows site-level script injection, or something that lets us do a Single Page Application override. But I'm very bullish on SPFx. I think 2017 will rock.

https://github.com/SharePoint/sp-dev-docs

Frameworks: Angular or React

React continues to better suit component development, and Angular might catch up, but we'll see. In SPG, we are split between the two camps, and I am not opposed to people who aren't familiar with Angular learning React instead.

I am, however, dead serious that no one should try to learn both Angular and React at the same time. Pick one and master it; the concepts of the other framework will then map across and come naturally. Learning both at the same time without mastering either will derail your learning path. Don't risk this.

Have an ASP.NET MVC background? Angular will make more sense. Want a more code-driven, component-based approach? Then React will make more sense. Pick one and run with it.

I have picked up Angular now and am quite happy with it. Feel free to reach out with Angular+SharePoint questions. I can't help with React questions.

SP Helper: PnP-JS-Core

I have high hopes that PnP-JS-Core will continue to gain popularity and wrap the SharePoint REST services well. I can totally see a few future blog posts using NodeJS and PnP-JS-Core inside an Azure Function to manage SharePoint Online.

As a bonus, it works really well with On-Premises too. Congrats on hitting 2.0.0, Patrick!

- https://github.com/SharePoint/PnP-JS-Core

- https://blogs.msdn.microsoft.com/patrickrodgers/2017/01/23/pnp-jscore-2-0-0/
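To give a feel for what a helper like PnP-JS-Core saves you from, here is a minimal sketch of building a raw SharePoint REST URL by hand. The `listItemsUrl` function, the contoso site URL and the list name are my own illustrations and are not part of PnP-JS-Core; treat it as a sketch of the plumbing, not as the library's API.

```typescript
// Hypothetical helper, for illustration only (not a PnP-JS-Core function).
// Builds the REST endpoint that returns the items of a list, found by title.
function listItemsUrl(
  siteUrl: string,
  listTitle: string,
  select: string[] = [],
  top?: number
): string {
  // Inside an OData string literal, a single quote is escaped by doubling it.
  const escapedTitle = encodeURIComponent(listTitle.replace(/'/g, "''"));
  // Drop trailing slashes so the URL never contains "//_api".
  const base = siteUrl.replace(/\/+$/, "");
  let url = base + "/_api/web/lists/getbytitle('" + escapedTitle + "')/items";

  // Optional OData query options.
  const query: string[] = [];
  if (select.length > 0) {
    query.push("$select=" + select.join(","));
  }
  if (top !== undefined) {
    query.push("$top=" + top);
  }
  if (query.length > 0) {
    url += "?" + query.join("&");
  }
  return url;
}

// Example values are made up.
const tasksUrl = listItemsUrl(
  "https://contoso.sharepoint.com/sites/dev/",
  "Team Tasks",
  ["Id", "Title"],
  5
);
console.log(tasksUrl);
```

You would still need to send this with an `Accept: application/json;odata=verbose` header and valid authentication, which is exactly the kind of repetitive work a wrapper library takes off your hands.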

Build tool: Webpack

Pick up webpack - do it now. Gulp and Grunt are no longer the right tools to manage the build process itself. Use them to automate the tasks around the build.

The rise of command-line interface (CLI) tools will be the theme of 2017. We have angular-cli and create-react-app, and SharePoint Framework has its own Yeoman template.

A CLI is just the command line's version of a "new file wizard".

Dashboards: Power BI

We did a bit of work embedding Power BI dashboards into SharePoint. With the rapid pace of releases (the upcoming Power BI SPFx, pushing data to Power BI with Flow, and Power BI streaming datasets), it will increasingly become a no-brainer to use Power BI to build your reporting dashboards and embed them into your sites. With a local gateway, Power BI works for on-premises data too.

Forms: ???

I would like to say Power Apps is the thing. But it's not, not yet. The reason I say this is that businesses want forms. They want forms to replace paper processes. They want forms to replace older, aging digital forms (InfoPath).

Power Apps isn't trying to be a Form. It is trying to be a Mobile App-Builder. It might get there one day. But I'm not sure if that's the course they have set.

I was thinking Angular-Forms for heavy customizations and Power Apps for simple ones.

I'm open to suggestions.

Automation: Flow / Logic Apps + Azure Functions

Several products hit the scene. Flow and Logic Apps are fairly basic on their own, but once you pair them with an atomic hammer (Azure Functions), everything looks like a nail and everything's easy.

The way I see it, Flow is better for single events and Logic Apps is better for sets of data.

And don't get me started on the dirt-cheap price point.

Server Code as a Service: Azure Functions

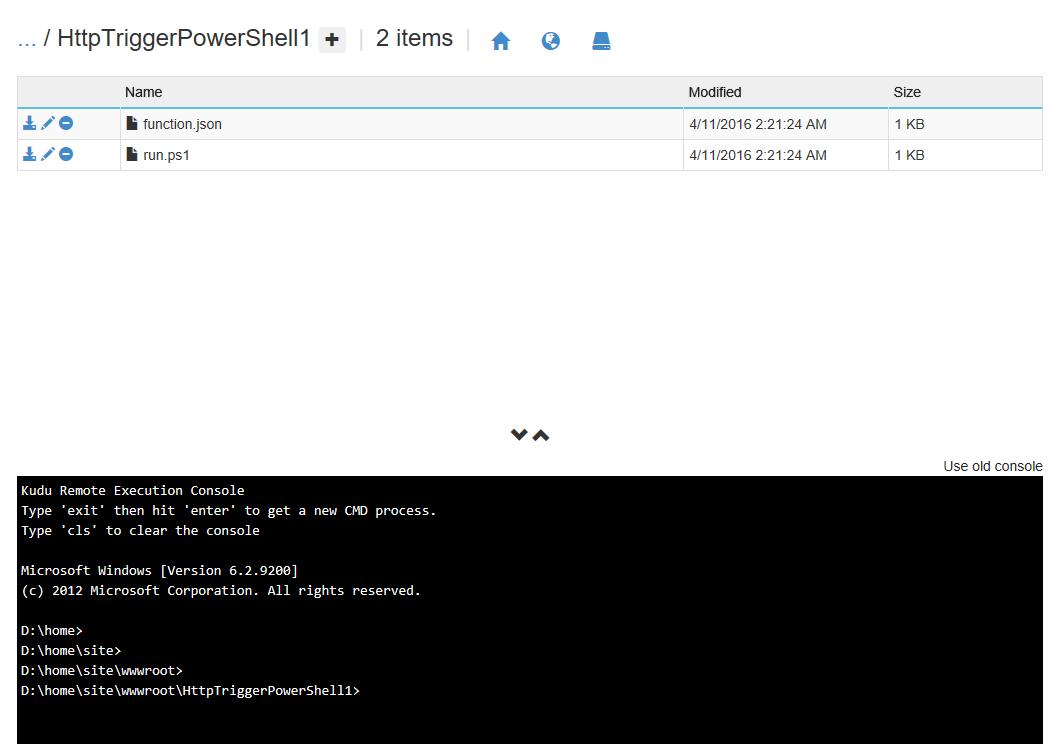

You've probably seen my recent posts where I rant on and on about Azure Functions. I truly see it as the ultimate power to do anything that we need a server to do.

- Any elevated-permissions task - in Workflow, Flow, Logic Apps or a JavaScript front-end application

- Any long running task

- Any scheduled task

- Any event response task - especially combined with the newly released SharePoint Webhooks

- Any task that requires you to build a set of microservices for your power user to build complex Flows or Power Apps

- Choose any language you like: C#, NodeJS or PowerShell. In fact, mix them in your microservices - nobody cares how each one runs, and it's great.
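To make the microservice idea concrete, here is a minimal sketch of an HTTP-triggered function in the NodeJS style, where the runtime hands you a context and a request and you set `context.res` before calling `context.done()`. The interfaces below are cut-down stand-ins I declared so the sample is self-contained, and the "approve a request" scenario and the `requestId` parameter are hypothetical.

```typescript
// Minimal stand-ins for what the Azure Functions NodeJS runtime passes in.
// Only the members used below are declared; the real objects carry more.
interface FunctionRequest {
  query: { [key: string]: string | undefined };
}
interface FunctionResponse {
  status: number;
  body: unknown;
}
interface FunctionContext {
  res?: FunctionResponse;
  log: (message: string) => void;
  done: () => void;
}

// Hypothetical microservice that a Flow or Power App could call over HTTP.
function run(context: FunctionContext, req: FunctionRequest): void {
  const requestId = req.query["requestId"];
  if (!requestId) {
    // Reject calls that do not say which request to act on.
    context.res = { status: 400, body: { error: "requestId is required" } };
    context.done();
    return;
  }

  context.log("Approving request " + requestId);
  // The elevated, long-running or scheduled work would go here,
  // for example a call to SharePoint with an app-only identity.
  context.res = { status: 200, body: { requestId: requestId, approved: true } };
  context.done();
}

// Local smoke test with a fake context, no Azure required.
const logged: string[] = [];
const fakeContext: FunctionContext = {
  log: (message) => { logged.push(message); },
  done: () => { /* the real runtime ends the invocation here */ },
};
run(fakeContext, { query: { requestId: "42" } });
console.log(JSON.stringify(fakeContext.res));
```

In a real function app you would export the handler (for example with `module.exports = run;`) and bind it to an HTTP trigger in the function's configuration. Keeping the handler as a plain function also means you can test it locally with a fake context, as above.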

Auth Helper: ADAL, MSAL

We can't escape this. As soon as we begin to connect to various APIs we'll need authentication bearer tokens.

- Learn adal-js and adal-node for JavaScript

- Learn ADAL for .NET

- Learn MSAL for .NET (preview of near future)

- Follow the God of Identity: Vittorio

We seem to get better and better helper libraries as time goes on. Regardless, in 2017 we will need to be able to authenticate to the Microsoft Graph from whichever language we use.
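Whatever library gets you the token, the last step looks the same everywhere: attach it as a bearer token on the request. Here is a minimal sketch of that step for a Microsoft Graph call. The `buildGraphRequest` helper and the placeholder token are my own illustrations; acquiring the token (with ADAL or MSAL) is assumed to have happened already and is not shown.

```typescript
// Shape of the request description returned below (illustrative, not a library type).
interface GraphRequest {
  url: string;
  method: string;
  headers: { [name: string]: string };
}

// Hypothetical helper: describes a GET request against Microsoft Graph v1.0.
// The access token is assumed to come from an auth library such as ADAL.
function buildGraphRequest(accessToken: string, path: string = "/me"): GraphRequest {
  if (accessToken.trim() === "") {
    throw new Error("An access token is required");
  }
  // Accept paths with or without a leading slash.
  const normalizedPath = path.charAt(0) === "/" ? path : "/" + path;
  return {
    url: "https://graph.microsoft.com/v1.0" + normalizedPath,
    method: "GET",
    headers: {
      // The bearer token goes in the Authorization header.
      Authorization: "Bearer " + accessToken,
      Accept: "application/json",
    },
  };
}

// "<token-from-adal>" is a placeholder, not a real token.
const meRequest = buildGraphRequest("<token-from-adal>");
console.log(meRequest.url);
console.log(meRequest.headers["Authorization"]);
```

The returned object can be handed to whatever HTTP client you use. The point is only that the header format is identical in every language, so the real learning effort goes into acquiring and refreshing the token.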

Julie Turner has an excellent series:

Summary

And these are my picks for 2017. Do let me know what you think - I'm really interested in points where you disagree with my choices, because I want to learn.