Building Binary output service with Cognitive Services and Microsoft Flow

We previously covered how to build binary web services with Microsoft Flow. A question has lingered in my mind ever since. If you can push binary data into a Flow, and pass it around within the Flow... can you output a binary file from Flow?

This question bothered me so much in my sleep that I decided to test it and write this blog post. And thus, we have probably the simplest example of the series.

- First, we will build a service endpoint that can return binary data.

- Then we will send that data through Cognitive Services and tag it as we go.

This is a post in a series on Microsoft Flow.

- JSON cheatsheet for Microsoft Flow

- Nested-Flow / Reusable-Function cheatsheet for Microsoft Flow

- Building non-JSON webservices with Flow

- One Connection to Proxy Them All - Microsoft Flow with Azure Functions Proxies

- Building Binary output service with Cognitive Services and Microsoft Flow

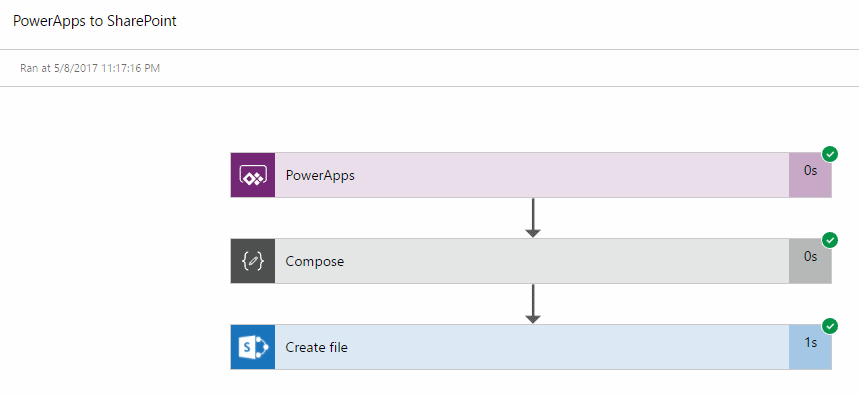

Build a Flow that returns non-text output

The request method needs to be set to GET. Take an image that sits behind authentication in SharePoint, and set it as the response output.

Test this with Postman

A few things to note:

- The request is a GET request.

- It replies with image/png (the content type was worked out automatically)

- ... and that's it, there's not a lot to say
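If you would rather script the same check than click through Postman, here is a minimal Python sketch. It assumes you paste in the GET URL that your own Flow generates; `FLOW_URL`, `fetch_binary` and `looks_like_png` are hypothetical names made up for this example, not anything Flow provides.

```python
import urllib.request

# Hypothetical placeholder: replace with the GET URL generated by your own Flow.
FLOW_URL = "https://example.invalid/your-flow-request-url"

# The fixed 8-byte signature that every PNG file starts with.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def fetch_binary(url, opener=urllib.request.urlopen):
    """Send a GET request and return (content_type, body_bytes).

    `opener` defaults to urllib's urlopen; it is a parameter only so the
    function can be exercised without touching the network.
    """
    with opener(url) as response:
        content_type = response.headers.get("Content-Type", "")
        body = response.read()
    return content_type, body


def looks_like_png(body):
    """Return True when the bytes start with the PNG file signature."""
    return body.startswith(PNG_SIGNATURE)


# Example usage (performs a real HTTP request, so it is left commented out):
# content_type, body = fetch_binary(FLOW_URL)
# print(content_type, len(body), looks_like_png(body))
```

Checking the first bytes as well as the header is a cheap way to confirm the Flow really returned the binary file rather than a text-encoded version of it.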

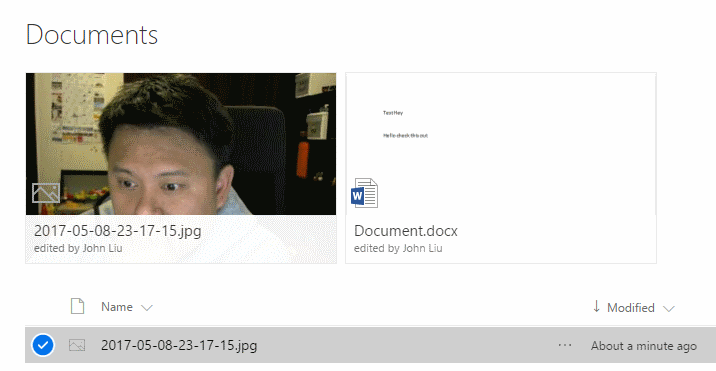

Add Cognitive Services - Computer Vision

You'll need to create a Cognitive Services resource in your Azure subscription. The free tier offers 5,000 images per month, at 20 per minute.

We take the output of the tag action and add it to a tags header in the service response.
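To make the idea concrete, here is a rough Python sketch of that step outside of Flow. It assumes the tag result is JSON with a `tags` array of objects that each carry a `name` and a `confidence`, and that the names are joined with commas in the header. Both are assumptions for illustration; the exact shape depends on how you wire up your Flow. The function names are hypothetical.

```python
import json


def tags_to_header_value(tag_result_json):
    """Flatten a tag result into one comma-separated header value.

    Assumes a JSON body shaped like {"tags": [{"name": ..., "confidence": ...}]}.
    """
    result = json.loads(tag_result_json)
    names = [tag["name"] for tag in result.get("tags", [])]
    return ", ".join(names)


def parse_tags_header(header_value):
    """Split the header value back into a list of tag names on the client."""
    return [name.strip() for name in header_value.split(",") if name.strip()]


# Made-up sample data, only to show the round trip.
sample = '{"tags": [{"name": "dog", "confidence": 0.99}, {"name": "indoor", "confidence": 0.91}]}'
header_value = tags_to_header_value(sample)
tag_names = parse_tags_header(header_value)
```

Putting the tags in a header keeps the response body as pure image bytes, so anything that consumes the URL as a plain file keeps working.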

And here we have the same image, but now with tags in the output.

Smart dogs.

Why do we need this?

- This means we can post an image in, and we can get an image out.

- Maybe you need to proxy a resource within SharePoint that is authenticated, but you want to use it directly as a file. If you use a SharePoint sharing link, it takes you to a page instead.

- With this direct link to the file, you can use it as an anchor within HTML, or use it to upload a file to an external system (via URL).

- Maybe this isn't an image, but a generated ZIP file that you want to copy somewhere else. Or it is a docx file.

- Or perhaps you want to send a picture to a Flow, then resize it or run it through cognitive services before getting back the result.

- Maybe you are just mad and want to auto-tag every image in your SharePoint?

That actually sounds amazing.

Because Microsoft Flow lets us push binary data through actions, I think there are a bunch of interesting scenarios for this.

Also, I think assistant branch manager and branch manager are awesome.