Field notes: using Power Automate with Power BI

I have been working at a client learning, testing and building Power BI reports, datasets, dataflows, datamarts and dashboards for the last 8 months, with a fairly healthy dose of Power Automate thrown in. I wanted to take a short breather and write down some of the learnings so far. I would also really like to hear your feedback on what your Power BI best practices are.

I will be presenting my experiences and examples at the Australian Digital Workplace Conference next week in Melbourne. I hope I’ll be seeing you there.

The system components

Call the API of a custom business application to retrieve project and product data. The system uses a NoSQL database, so we pull out pages of JSON via Power Automate.

At the moment, we store these JSON files in a document library in SharePoint.

Use a Power BI dataflow to process and merge this JSON data into a staging table.

Use a second Power BI dataflow to do the actual work of transforming the data from the staging dataflow.

Reports use the staging dataflow, and reference it if additional local transformations are needed.

Fancy visuals with Deneb and HTML content visuals.

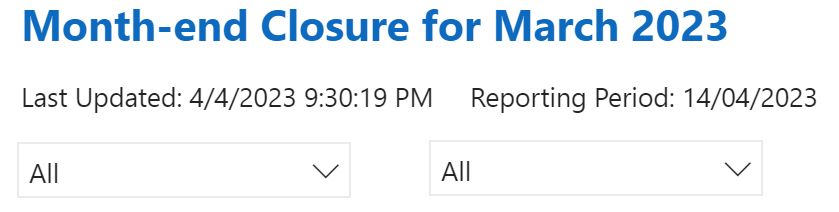

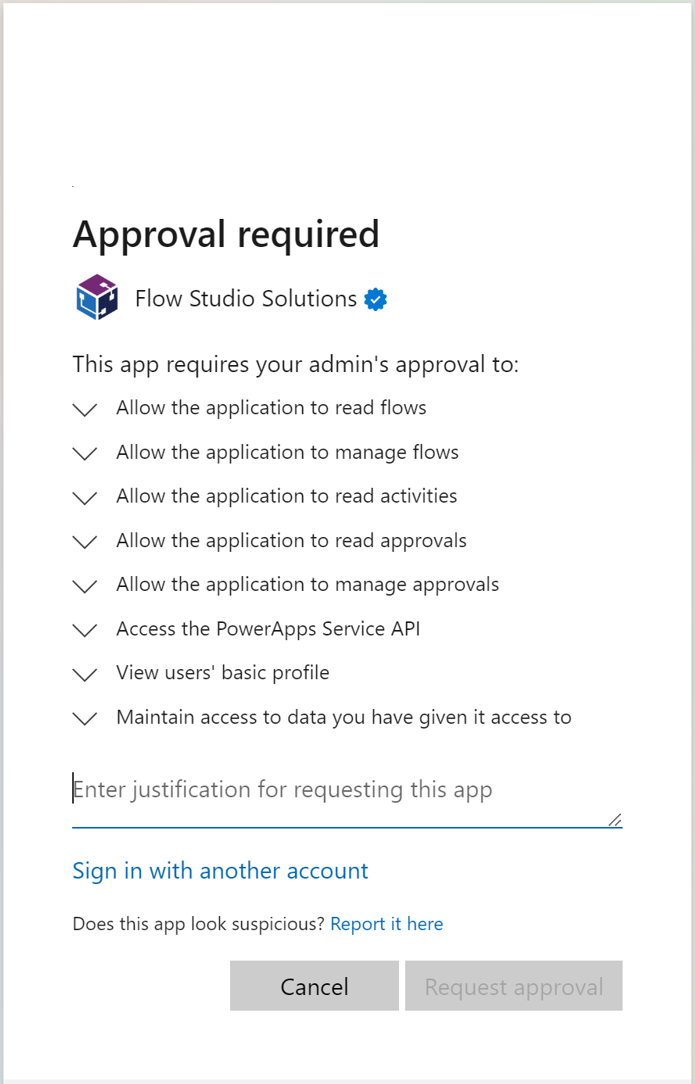

Calling APIs - we use HTTP requests and calculate the nonce and api-key within Power Automate, because this isn’t something Power BI can call directly without some middleware. We are only interested in a subset of projects from our dataset, so every evening Power Automate queries the Power BI dataset, fetches a list of project codes and makes the API calls. It also checks whether the JSON has changed from the version stored in SharePoint and skips the write if it has not been modified. (Unfortunately, we don’t get last-modified metadata from the source.)
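To make the skip-if-unchanged check concrete, here is a minimal sketch in Python. The real check runs inside a Power Automate flow, so this only illustrates the idea. The function names are hypothetical, and comparing a hash of the canonicalised JSON is an assumption about how the comparison could be done, not a description of our flow.

```python
import hashlib
import json
from typing import Optional


def content_fingerprint(payload: dict) -> str:
    """Hash a JSON payload so that key order and whitespace do not matter."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def needs_write(new_payload: dict, stored_payload: Optional[dict]) -> bool:
    """Return True when there is no stored copy or the content has changed."""
    if stored_payload is None:
        return True
    return content_fingerprint(new_payload) != content_fingerprint(stored_payload)
```

Because the source gives us no last-modified value, comparing content is the only signal available. Sorting the keys before hashing avoids rewriting a file just because the API returned the same fields in a different order.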

We put JSON data into a SharePoint library and store it by month - this is because the business has a monthly reporting cycle, and we wanted data captured against last month vs this month. An alternative would be to use Azure Blob Storage for this staging area, because once we reached several hundred JSON files, SharePoint often threw a too-busy error at Power BI during data refresh.
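As a rough illustration of the month-based layout, the sketch below builds a folder path per month and works out the previous month for a last-month vs this-month comparison. The `YYYY-MM/<project code>.json` naming and the function names are assumptions made for the example, not the exact convention in our library.

```python
from datetime import date, timedelta


def monthly_path(project_code: str, captured_on: date) -> str:
    """Build a library-relative path such as '2023-03/P-001.json'."""
    return f"{captured_on:%Y-%m}/{project_code}.json"


def previous_month(day: date) -> date:
    """Return the first day of the month before the given date."""
    first_of_month = day.replace(day=1)
    return (first_of_month - timedelta(days=1)).replace(day=1)
```

For a capture on 14 March 2023, `monthly_path("P-001", date(2023, 3, 14))` gives `2023-03/P-001.json`, and the matching file for last month sits under the folder for `previous_month(date(2023, 3, 14))`.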

On re-use, we tried a myriad of methods and found that using two dataflows works best. The first dataflow provides the raw JSON content per file from SharePoint. This dataflow is configured for incremental refresh, so if a JSON file hasn’t been updated, it doesn’t need to be refreshed in the dataflow (this solves our too-busy problem). A datamart can’t easily be used within the ETL of another dataflow (it’s more suitable for direct query).

Our second dataflow is where we transform the JSON data: we navigate the JSON structure and pull out records and lists accordingly.
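The actual transformation is written in Power Query M inside the dataflow. As a language-neutral illustration of what "navigate the structure and pull out records and lists" means, here is a small Python sketch that expands a nested list into one row per item. The field names (`code`, `name`, `products`, `id`) are hypothetical and do not reflect the real API schema.

```python
from typing import Any, Dict, List


def expand_products(project: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Turn one project record with a nested product list into flat rows."""
    rows = []
    # A missing or null product list yields no rows rather than an error.
    for product in project.get("products") or []:
        rows.append({
            "project_code": project.get("code"),
            "project_name": project.get("name"),
            "product_id": product.get("id"),
            "product_name": product.get("name"),
        })
    return rows
```

This mirrors the usual pattern in the dataflow: drill into a record, expand a list column into rows, then expand each item's fields into columns.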

We try to have very light local transformation or modification within the Power BI report. Instead, as much as possible, we want to run the transform within the dataflow.

This is a high-level overview post, and there are quite a few more (smaller, bite-sized) notes I want to write down in time, but this will do for now. We’ll leave the rest for a future discussion.

Other design decisions, notes and thoughts

What Power Automate brings to the table.

What Power BI Dataflows bring to the table.

Notes on using DAX and Power Query M

Using Dataverse

Considerations with using SharePoint or Azure Blob Storage

Licensing costs