Tips on fixing Power BI dynamic value

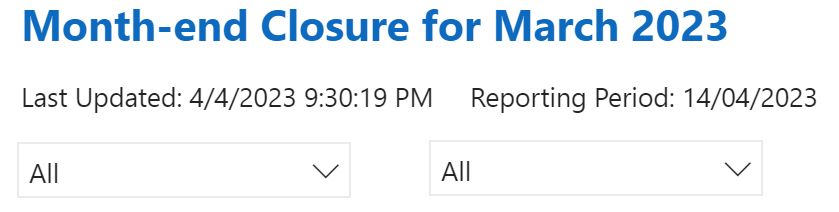

In Power BI we can use dynamic values within a text block. This lets us create dynamic paragraphs with data that updates automatically.

The problem is that we specify the dynamic value via Power BI Q&A. This can be very hit or miss, and when it misses, it’s a frustrating experience.

Tip 1 - just use a measure (with a unique name)

The first tip then is to create our own measure with a unique name so that it can’t be confused with anything else. Within our measure, we can use DAX and write specifically what we want the dynamic value to be.
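As a rough sketch of what such a measure might look like: the measure name `DV Total Sales Text` and the `Sales[Amount]` column are made up for illustration, so swap in names from your own model. The idea is simply a name that Q&A is unlikely to confuse with anything else, plus DAX that returns exactly the text we want to show.

```dax
DV Total Sales Text =
// Hypothetical measure: the name and Sales[Amount] are assumptions, not from a real model.
// The "DV" prefix is only there to keep the name unique for Q&A.
VAR TotalSales = SUM ( Sales[Amount] )
RETURN
    IF (
        ISBLANK ( TotalSales ),
        "no sales",                      // fallback text when there is no data
        FORMAT ( TotalSales, "#,##0" )   // format the number inside the measure
    )
```

Returning text from the measure keeps the formatting under our control, so we are not relying on how the dynamic value formats a raw number.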

We can add a Card visual to inspect what the value of our measure is. This makes debugging the measure quite simple.

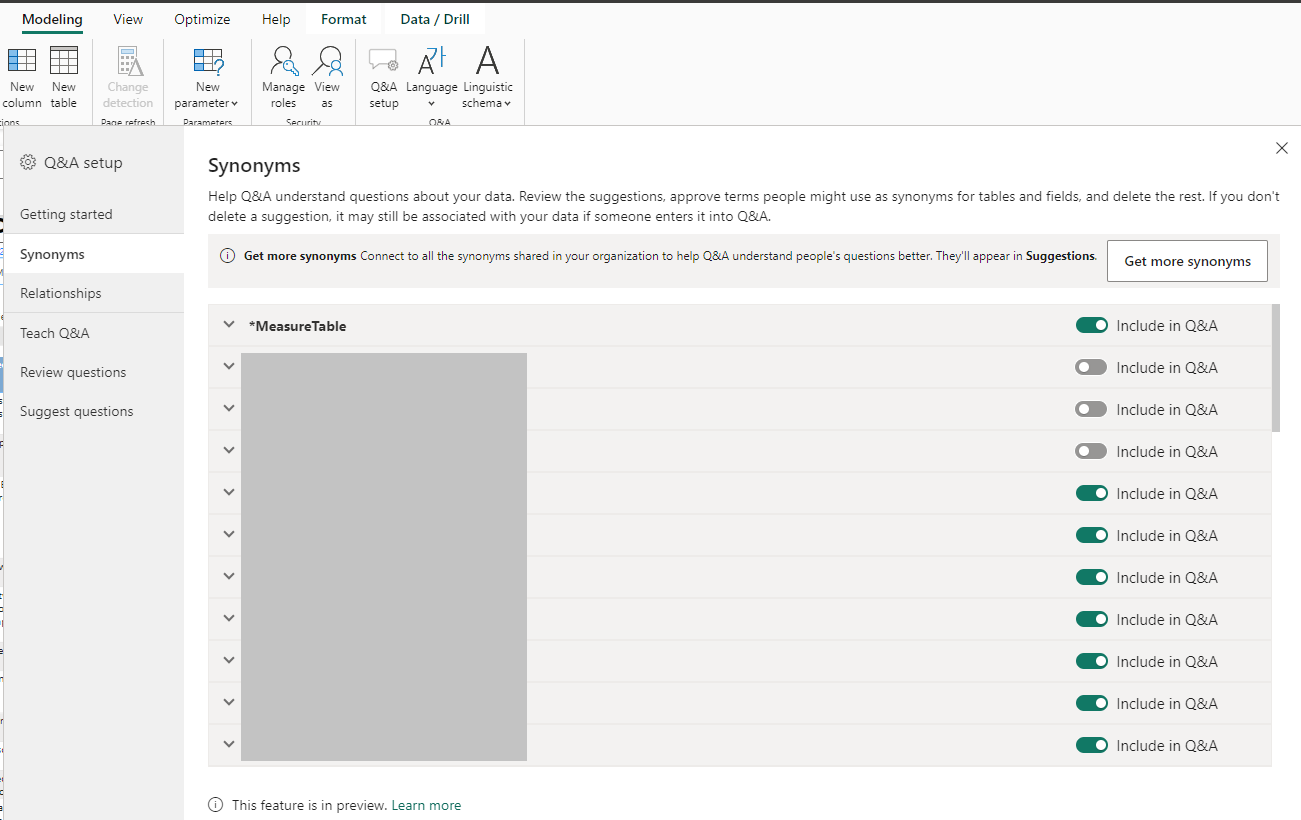

Tip 2 - use Q&A setup, particularly to set up synonyms

I encountered a new problem today: no matter what I tried, Power BI did not recognize the measure (or even the new table that I was looking for). I went into Q&A setup and added simpler, unique names to the measure and the table via the Synonyms dialog, but Power BI still could not recognize them.

Tip 3 - too many entities

I tried to export the linguistic schema (beware, this is a YAML file) to see what was wrong with the model, and that’s when I got a new error message, which pointed to the model having too many entities.

So I went back to the Synonyms dialog and unchecked “Include in Q&A” on several entity tables that did not need to be included. As soon as I did this, the dynamic value could find my measure by name and link up what I wanted to render correctly.

I wanted to write this down as it may help others. This ate 2 hours of time for two people, and we created a bunch of rubbish entity names and measure names that I now need to go clean up…