Two ways to convert SharePoint files to PDF via Flow

This blog post is divided into three parts: The Easy, The Auth and The Complete.

Microsoft Flow has released a new action to Convert Files to PDF. This made my October. So of course we have to play with it.

Part 1. The Easy

Now, this works well, but it raises a few questions:

- Why do I have to copy to OneDrive for Business?

Because the Convert File action is available on the OneDrive for Business connector (and on the consumer OneDrive connector), but not on the SharePoint connector.

- Can I do this without copying to OneDrive for Business?

Not with the default actions, for now. The SharePoint connector has no Convert File action, and its Get File Content action doesn't accept a format parameter.

So copying to OneDrive for Business first is the simplest solution.

Warning: Next be dragons (Auth and API)

We are going to dive in to see which API this uses, and whether we can call the same API on a SharePoint library document directly, without copying the file to OneDrive first.

This next part is good for you. But it is heavy and will look complicated. Brace yourselves.

...So what API does this use?

https://docs.microsoft.com/en-us/onedrive/developer/rest-api/api/driveitem_get_content_format

GET /drive/items/{item-id}/content?format={format}

GET /drive/root:/{path and filename}:/content?format={format}

Specifically, this uses the Microsoft Graph API.
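The two endpoint forms above can be sketched as a small Python helper that builds the request URL, addressing the item either by id or by path. This is a minimal sketch under stated assumptions: it assumes the Graph `v1.0` base URL, a drive prefix such as `/me/drive` or `/sites/{site-id}/drive` for a SharePoint library, and the lowercase `pdf` value that the Graph documentation lists for `format`. `build_format_url` is a hypothetical name, not part of any SDK.

```python
from typing import Optional
from urllib.parse import quote, urlencode

# Assumption: Graph v1.0; adjust if you target the beta endpoint.
GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_format_url(drive_prefix: str, *, item_id: Optional[str] = None,
                     path: Optional[str] = None, fmt: str = "pdf") -> str:
    """Build GET .../content?format=... for a drive item (hypothetical helper).

    drive_prefix is e.g. "/me/drive" or "/sites/{site-id}/drive".
    Exactly one of item_id or path must be given.
    """
    if (item_id is None) == (path is None):
        raise ValueError("pass exactly one of item_id or path")
    if item_id is not None:
        # Id-based addressing: /items/{item-id}
        item = f"/items/{quote(item_id, safe='')}"
    else:
        # Path-based addressing: /root:/{path and filename}:
        # quote() keeps '/' but escapes spaces and other unsafe characters.
        item = f"/root:/{quote(path.strip('/'))}:"
    return f"{GRAPH_BASE}{drive_prefix}{item}/content?{urlencode({'format': fmt})}"
```

For example, `build_format_url("/me/drive", path="/Docs/Report 1.docx")` yields a `root:/Docs/Report%201.docx:` style URL, matching the second endpoint form.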

Part 2. The Auth

Disclaimer: OAuth looks familiar, but the steps are always tricky and easy to mess up. So if you are following along, walk carefully.

For the next part, we need to connect to MS Graph with app-only permissions.

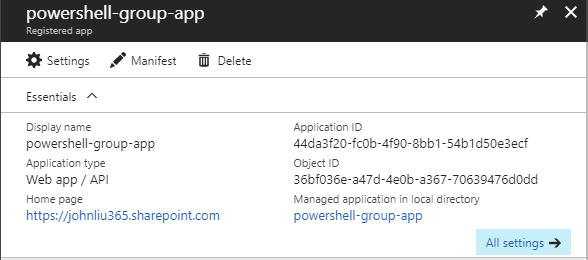

In the Azure Portal, under Azure AD, create an App Registration (I'm reusing a powershell-group-app registration I had previously baked).

We will be accessing files, so make sure an Application Permission for reading files is granted. This requires admin consent.

In the Azure AD portal, hit Grant Permissions to perform admin consent directly.
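One way to check that admin consent actually took effect is to look at an app-only access token (the token request comes a little later in this post): app-only tokens list the granted application permissions in a `roles` claim. A minimal Python sketch, assuming the token is a standard three-part JWT; `token_roles` is a hypothetical helper, and it deliberately skips signature validation, so treat it as a debugging aid only.

```python
import base64
import json


def token_roles(access_token: str) -> list:
    """Return the 'roles' claim of a JWT access token (hypothetical helper).

    Decodes the payload only; the signature is NOT verified.
    """
    parts = access_token.split(".")
    if len(parts) != 3:
        raise ValueError("not a JWT (expected header.payload.signature)")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64url padding
    claims = json.loads(base64.urlsafe_b64decode(payload))
    # An empty list usually means no application permission was consented.
    return claims.get("roles", [])
```

If the permission you granted is missing from the returned list, the consent step above is the first thing to revisit.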

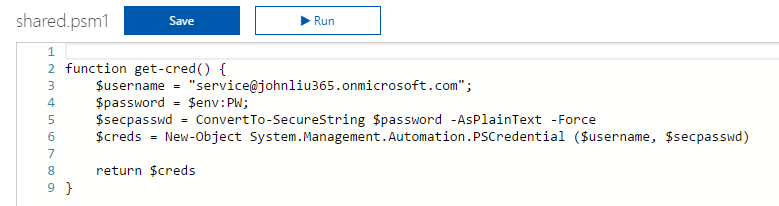

Now we are going to write the Flow with HTTP requests.

First, hit the token endpoint for our tenant with a POST request. The body must contain grant_type=client_credentials, along with client_id, client_secret and resource=https://graph.microsoft.com.
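For reference outside Flow, the same token request can be sketched in Python. This assumes the Azure AD v1 endpoint `https://login.microsoftonline.com/{tenant}/oauth2/token` (the one that accepts a `resource` parameter) and a form-encoded body; `build_token_request` is a hypothetical helper that builds the request without sending it.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(tenant: str, client_id: str, client_secret: str) -> Request:
    """Build (but do not send) the app-only token POST for Microsoft Graph.

    Assumption: Azure AD v1 token endpoint, which takes 'resource'.
    """
    url = f"https://login.microsoftonline.com/{tenant}/oauth2/token"
    body = urlencode({
        "grant_type": "client_credentials",  # plural: client_credentials
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": "https://graph.microsoft.com",
    }).encode("ascii")
    return Request(url, data=body, method="POST",
                   headers={"Content-Type": "application/x-www-form-urlencoded"})
```

Sending it is then `urllib.request.urlopen(build_token_request(...))`. Using `urlencode` also escapes characters such as `+` and `/`, which often appear in client secrets and can break a hand-built body.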

If successful, this request gives us back JSON. Parse it with a Parse JSON action using this schema:

{
  "type": "object",
  "properties": {
    "token_type": { "type": "string" },
    "expires_in": { "type": "string" },
    "ext_expires_in": { "type": "string" },
    "expires_on": { "type": "string" },
    "not_before": { "type": "string" },
    "resource": { "type": "string" },
    "access_token": { "type": "string" }
  }
}

This gives Flow a variable for access_token, which the remaining steps use to call Microsoft Graph.
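The same parsing step, sketched in Python: pull out `access_token` and work out when it expires. The schema above types the numeric fields as strings, so this sketch converts them with `int()`; it also assumes that a failed request returns an `error_description` field instead of a token. `parse_token_response` is a hypothetical helper.

```python
import json
import time


def parse_token_response(raw: str) -> dict:
    """Extract the bearer token and its absolute expiry (hypothetical helper)."""
    data = json.loads(raw)
    if "access_token" not in data:
        # Error responses carry 'error' / 'error_description' instead of a token.
        raise ValueError(f"token request failed: {data.get('error_description', data)}")
    if "expires_on" in data:
        # Absolute Unix time, sent as a string.
        expires_on = int(data["expires_on"])
    else:
        # Fall back to the relative lifetime in seconds.
        expires_on = int(time.time()) + int(data["expires_in"])
    return {
        "access_token": data["access_token"],
        "token_type": data.get("token_type", "Bearer"),
        "expires_on": expires_on,
    }
```

Keeping `expires_on` around makes it easy to request a fresh token before a long-running flow outlives the first one.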

Test this by calling the MS Graph endpoint for a SharePoint site.

This HTTP request with the Bearer access_token successfully returns SharePoint site data from Microsoft Graph.
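A Python sketch of that test call, assuming the Graph "get site by path" form `GET /v1.0/sites/{hostname}:/{server-relative-path}` and a token obtained as above. `build_site_request` is a hypothetical helper and the host/site names in the usage note are placeholders.

```python
from urllib.parse import quote
from urllib.request import Request

GRAPH_BASE = "https://graph.microsoft.com/v1.0"  # assumption: v1.0 endpoint


def build_site_request(access_token: str, hostname: str, site_path: str) -> Request:
    """Build GET /sites/{hostname}:/{server-relative-path} with a Bearer token."""
    url = f"{GRAPH_BASE}/sites/{hostname}:/{quote(site_path.strip('/'))}"
    return Request(url, headers={
        "Authorization": f"Bearer {access_token}",  # the access_token from the token step
        "Accept": "application/json",
    })
```

Usage, e.g. `json.load(urllib.request.urlopen(build_site_request(token, "contoso.sharepoint.com", "/sites/TeamSite")))["id"]`, returns the site id, which can then be used in a drive prefix such as `/sites/{site-id}/drive`.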

Part 3. The Complete Solution to fetch SharePoint document as PDF

Call /content?format=PDF

A few things are going on in this result:

- Flow thinks this request has failed, because it doesn't return a 2xx status. It returns a 302 redirect.

- The response headers contain the Location of the redirect, which is where the PDF file is.
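Outside Flow, the same 302 behavior can be reproduced in Python. By default `urllib` follows redirects automatically; this sketch installs a redirect handler that refuses to, so the 302 surfaces as an `HTTPError` whose headers carry `Location`, mirroring the two-step approach in the flow. It assumes the content URL and token from the earlier steps; `_NoRedirect` and `get_pdf_location` are hypothetical names.

```python
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Redirect handler that refuses to follow 3xx responses."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        # Returning None makes urllib raise HTTPError for the 3xx instead of following it.
        return None


def get_pdf_location(content_url: str, access_token: str) -> str:
    """Call .../content?format=pdf and return the Location header of the 302."""
    opener = urllib.request.build_opener(_NoRedirect)  # replaces the default redirect handler
    req = urllib.request.Request(
        content_url, headers={"Authorization": f"Bearer {access_token}"})
    try:
        with opener.open(req) as resp:
            raise RuntimeError(f"expected a 302 redirect, got HTTP {resp.status}")
    except urllib.error.HTTPError as err:
        if err.code != 302:
            raise  # a genuine failure such as 401, 403 or 404
        location = err.headers.get("Location")
        if not location:
            raise RuntimeError("302 response did not include a Location header")
        return location
```

Like the flow, the sketch treats the "error" as the expected outcome and only re-raises when the status is something other than 302.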

Parse JSON again, this time on the response headers:

{
  "type": "object",
  "properties": {
    "Transfer-Encoding": { "type": "string" },
    "request-id": { "type": "string" },
    "client-request-id": { "type": "string" },
    "x-ms-ags-diagnostic": { "type": "string" },
    "Duration": { "type": "string" },
    "Cache-Control": { "type": "string" },
    "Date": { "type": "string" },
    "Location": { "type": "string" },
    "Content-Type": { "type": "string" },
    "Content-Length": { "type": "string" }
  }
}

We just want Location. We also need to configure Continue on previous HTTP error, so that this step still runs after Flow marks the 302 as a failure.

And finally, retrieve the file with another GET to the Location URL.
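The final GET, sketched in Python. According to the Graph documentation for this endpoint, the Location is a short-lived, pre-authenticated download URL, so this sketch sends no Authorization header. `download_pdf` is a hypothetical helper and the destination path is up to you.

```python
import urllib.request


def download_pdf(location: str, dest_path: str) -> int:
    """GET the pre-authenticated Location URL, save the bytes, return the size written.

    No Authorization header is sent; the URL itself carries the authorization.
    """
    with urllib.request.urlopen(location) as resp:
        data = resp.read()
    with open(dest_path, "wb") as fh:
        fh.write(data)
    return len(data)
```

Because these URLs expire after a few minutes, the download should follow the redirect step promptly rather than being stored for later.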

When run, the flow looks like this:

Summary

The complete solution uses HTTP actions to call MS Graph directly and pulls back the PDF file after a 302 response. This is a fairly complex example, so please evaluate whether you want the Correct Way or the Easy Way.

Note also that Microsoft Flow has a Premium connector for Azure AD Requests, which removes the need for the middle part of this blog post (the Auth) and lets you dive right into MS Graph REST endpoints without worrying about access_tokens.

Call this Flow request and it downloads the PDF file, converted from a DOCX document in a SharePoint team site.

Review of Special Techniques Invoked:

- MS Graph Auth

- The Continue on Error configuration

- Parse JSON on Response Header