Serverless connect-the-dots: MP3 to WAV via ffmpeg.exe in Azure Functions, for PowerApps and Flow

There's no good title for this post; there are just too many pieces to connect.

This problem has been on my todo list for a while. I'll describe it quickly, then get into the solution.

- PowerApps Microphone control records MP3 files

- Cognitive Speech to Text wants to turn WAV files into JSON

- Even with Flow, we can't convert the audio file formats.

- We need an Azure Function to glue this one step together

- After a bit of research, it looks like FFMPEG, a popular free utility, can be used to do the conversion

Azure Functions and FFMPEG

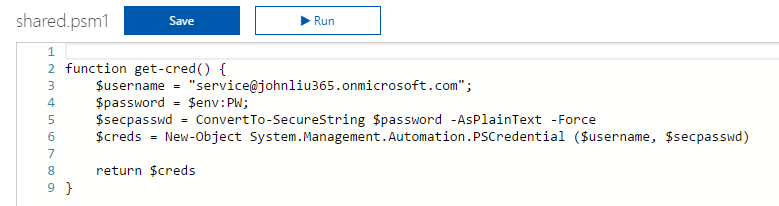

So my initial thought is that, well, I'll just run this exe through PowerShell. But then I remembered that PowerShell doesn't handle binary input and output that well. A quick search nets me several implementations in C#, and one of them catches my eye:

Jordan Knight is one of our Australian DX Microsofties, so of course I start with his code.

It was really quick to get going. But Jordan's code is triggered from blob storage, and the Azure Functions blob storage binding has a waiting time that I wanted to shrink. So I rewrote the input and output bindings to turn the whole conversion function into a single HTTP call: MP3 in the request, WAV in the response.

https://github.com/johnnliu/function-ffmpeg-mp3-to-wav/blob/master/run.csx

    using System.Diagnostics;
    using System.IO;
    using System.Net;
    using System.Net.Http.Headers;

    public static HttpResponseMessage Run(Stream req, TraceWriter log)
    {
        // Work inside a unique temp folder so concurrent requests don't collide.
        var tempPath = Path.Combine(Path.GetTempPath(), Guid.NewGuid().ToString());
        Directory.CreateDirectory(tempPath);
        var temp = Path.Combine(tempPath, "input.mp3");
        var tempOut = Path.Combine(tempPath, "output.wav");

        try
        {
            // Save the posted MP3 bytes to disk so ffmpeg can read them.
            using (var fs = File.Create(temp))
            {
                req.CopyTo(fs);
            }
            log.Info($"Input length: {new FileInfo(temp).Length}");

            var psi = new ProcessStartInfo();
            psi.FileName = @"D:\home\site\wwwroot\mp3-to-wave\ffmpeg.exe";
            psi.Arguments = $"-i \"{temp}\" \"{tempOut}\"";
            psi.RedirectStandardError = true;
            psi.UseShellExecute = false;
            log.Info($"Args: {psi.Arguments}");

            using (var process = Process.Start(psi))
            {
                // ffmpeg logs to stderr; drain it so a full pipe can't stall the process.
                var stderr = process.StandardError.ReadToEndAsync();
                if (!process.WaitForExit((int)TimeSpan.FromSeconds(60).TotalMilliseconds))
                {
                    process.Kill();
                    process.WaitForExit();
                    log.Error("ffmpeg timed out");
                    return new HttpResponseMessage(HttpStatusCode.InternalServerError);
                }
                if (process.ExitCode != 0 || !File.Exists(tempOut))
                {
                    log.Error($"ffmpeg failed: {stderr.Result}");
                    return new HttpResponseMessage(HttpStatusCode.InternalServerError);
                }
            }

            // Read the converted WAV into memory so the temp files can be removed.
            var bytes = File.ReadAllBytes(tempOut);
            log.Info($"Output length: {bytes.Length}");

            var response = new HttpResponseMessage(HttpStatusCode.OK);
            response.Content = new StreamContent(new MemoryStream(bytes));
            response.Content.Headers.ContentType = new MediaTypeHeaderValue("audio/wav");
            return response;
        }
        finally
        {
            // Remove the temp folder and everything in it.
            Directory.Delete(tempPath, true);
        }
    }

Trick: You can upload ffmpeg.exe and run it inside an Azure Function
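The `psi.Arguments` line in the function relies on ffmpeg's defaults for the WAV output. If the speech service turns out to be picky about sample rate or channel count, the arguments can be spelled out. Here is a minimal sketch, assuming 16 kHz mono 16-bit PCM is the target (that target is an assumption, not something tested in this post). `FfmpegArgs.Build` is a hypothetical helper; `-y`, `-i`, `-ar`, `-ac` and `-acodec` are standard ffmpeg options.

```csharp
using System;

public static class FfmpegArgs
{
    // Hypothetical helper: builds the ffmpeg command line for MP3 -> WAV.
    // The 16000 Hz / 1 channel defaults are assumptions about what the
    // speech service prefers; adjust them to whatever your service documents.
    public static string Build(string inputPath, string outputPath,
                               int sampleRate = 16000, int channels = 1)
    {
        // -y       overwrite the output file if it already exists
        // -ar      output audio sample rate in Hz
        // -ac      number of output audio channels
        // -acodec  pcm_s16le = uncompressed 16-bit little-endian PCM
        return $"-y -i \"{inputPath}\" -ar {sampleRate} -ac {channels} " +
               $"-acodec pcm_s16le \"{outputPath}\"";
    }
}
```

In the function it would be used as `psi.Arguments = FfmpegArgs.Build(temp, tempOut);`.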

https://github.com/johnnliu/function-ffmpeg-mp3-to-wav/blob/master/function.json

    {
      "bindings": [
        {
          "type": "httpTrigger",
          "name": "req",
          "authLevel": "function",
          "methods": [
            "post"
          ],
          "direction": "in"
        },
        {
          "type": "http",
          "name": "$return",
          "direction": "out"
        }
      ],
      "disabled": false
    }

Azure Functions Custom Binding

Ling Toh (of Azure Functions) reached out and told me I can try the new Azure Functions custom bindings for Cognitive Services directly. But I wanted to try this with Flow, so I need to come back to custom bindings in the future.

https://twitter.com/ling_toh/status/919891283400724482

Set up Cognitive Services - Speech

In the Azure Portal, create a Cognitive Services resource for Speech

Copy one of the keys; we need it later

Flow

Take the binary multipart body sent to this Flow and send that to the Azure Function

base64ToBinary(triggerMultipartBody(0)?['$content'])
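For readers more at home in C# than in Flow expressions, here is a rough sketch of what that expression does. It assumes the multipart part arrives as a JSON object with the file base64-encoded in a `$content` property next to a `$content-type` property (my reading of how Flow represents binary content; check a real run's trigger output to confirm). `FlowContent.ToBinary` is a hypothetical name, not a Flow or Azure API.

```csharp
using System;
using System.Text.Json;

public static class FlowContent
{
    // Rough C# counterpart of base64ToBinary(part?['$content']).
    // Returns null when the part has no "$content" property.
    public static byte[] ToBinary(string partJson)
    {
        using (var doc = JsonDocument.Parse(partJson))
        {
            if (!doc.RootElement.TryGetProperty("$content", out var content))
            {
                return null;
            }
            // The property holds the file as base64 text; decode it to raw bytes.
            return Convert.FromBase64String(content.GetString());
        }
    }
}
```

The decoded bytes are what gets posted as the request body to the Azure Function.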

Take the binary returned from the Function and send that to the Bing Speech API

You'll need the key from the Cognitive Services resource created earlier for the Ocp-Apim-Subscription-Key header
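The same HTTP call can be sketched in C#, mostly to show where the key goes. The endpoint URL and its `language` and `format` query parameters are assumptions based on the Bing Speech REST API as I remember it; use the endpoint shown for your own Speech resource. `SpeechRequest.Build` is a hypothetical helper and only builds the request, it does not send it.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

public static class SpeechRequest
{
    // Assumed endpoint; replace with the one documented for your Speech resource.
    public const string Endpoint =
        "https://speech.platform.bing.com/speech/recognition/interactive/cognitiveservices/v1" +
        "?language=en-US&format=simple";

    // Hypothetical helper: wraps the WAV bytes in a POST request
    // carrying the subscription key header.
    public static HttpRequestMessage Build(byte[] wavBytes, string subscriptionKey)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, Endpoint);
        request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);
        request.Content = new ByteArrayContent(wavBytes);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("audio/wav");
        return request;
    }
}
```

Sending it is then a matter of `await new HttpClient().SendAsync(SpeechRequest.Build(wavBytes, key))`, with the JSON result in the response body.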

Flow returns the result from Speech to Text, which I force into JSON

json(body('HTTP_Cognitive_Speech'))

Try it:

Swagger

PowerApps needs this to call Flow

I despise Swagger so much I don't even want to talk about it (the Swagger file took 4 hours, the most problematic part of the whole exercise)

    {
      "swagger": "2.0",
      "info": {
        "description": "speech to text",
        "version": "1.0.0",
        "title": "speech-api"
      },
      "host": "prod-03.australiasoutheast.logic.azure.com",
      "basePath": "/workflows",
      "schemes": [
        "https"
      ],
      "paths": {
        "/xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx/triggers/manual/paths/invoke": {
          "post": {
            "summary": "speech to text",
            "description": "speech to text",
            "operationId": "Speech-to-text",
            "consumes": [
              "multipart/form-data"
            ],
            "parameters": [
              {
                "name": "api-version",
                "in": "query",
                "default": "2016-06-01",
                "required": true,
                "type": "string",
                "x-ms-visibility": "internal"
              },
              {
                "name": "sp",
                "in": "query",
                "default": "/triggers/manual/run",
                "required": true,
                "type": "string",
                "x-ms-visibility": "internal"
              },
              {
                "name": "sv",
                "in": "query",
                "default": "1.0",
                "required": true,
                "type": "string",
                "x-ms-visibility": "internal"
              },
              {
                "name": "sig",
                "in": "query",
                "default": "4h5rHrIm1VyQhwFYtbTDSM_EtcHLyWC2OMLqPkZ31tc",
                "required": true,
                "type": "string",
                "x-ms-visibility": "internal"
              },
              {
                "name": "file",
                "in": "formData",
                "description": "file to upload",
                "required": true,
                "type": "file"
              }
            ],
            "produces": [
              "application/json; charset=utf-8"
            ],
            "responses": {
              "200": {
                "description": "OK",
                "schema": {
                  "description": "",
                  "type": "object",
                  "properties": {
                    "RecognitionStatus": {
                      "type": "string"
                    },
                    "DisplayText": {
                      "type": "string"
                    },
                    "Offset": {
                      "type": "number"
                    },
                    "Duration": {
                      "type": "number"
                    }
                  },
                  "required": [
                    "RecognitionStatus",
                    "DisplayText",
                    "Offset",
                    "Duration"
                  ]
                }
              }
            }
          }
        }
      }
    }

Power Apps

Result

Summary

I expect a few outcomes from this blog post.

- ffmpeg.exe is very powerful and can convert between many audio and video formats. I'm pretty certain we will be using it a lot more, for many purposes.

- Cognitive Speech API doesn't have a Flow action yet. I'm sure we will see it soon.

- PowerApps or Flow may need a native way of converting audio file formats. Until such an action is available, we will need to rely on ffmpeg within an Azure Function.

- The problem of converting MP3 to WAV was raised by Paul Culmsee - the rest of the blog post is just to connect the dots and make sure it works. I was also blocked by an error in the output of my original Swagger file, which I fixed only after Paul sent me a working Swagger file he used for another service - thank you!