Flow: a lightweight, fast template engine using split() twice

Technique 2: Building a Lightweight Template Engine (Using Split Twice)

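Take this template, written in a Handlebars-style syntax and stored in a Compose action named Compose:

ABC {{def}} GHI {{jkl}} MN

We also have a dictionary, stored in a second Compose action named Dictionary: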

{
  "def": "fish 🐟",
  "jkl": "chips 🍟"
}

We want to build a mini template engine that replaces each {{token}} with its value from the dictionary.

Now you might think: ah OK, let's get some variables in here.

But John really dislikes variables. In fact, most of these flow hacks are about how not to use variables. So how do you do this without them?

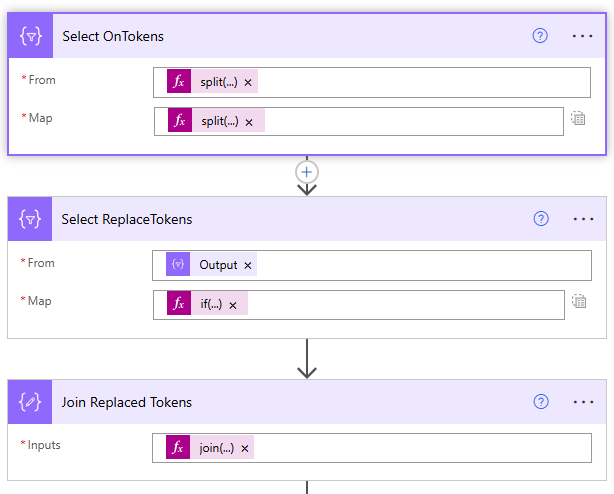

1. Split on {{

split(outputs('Compose'), '{{')

Result:

[
  "ABC ",
  "def}} GHI ",
  "jkl}} MN"
]

2. Split each on }}

Inside each item, split again on }}:

split(item(),'}}')

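So each piece of the original string now becomes either a one-element array (plain text, no token):

["ABC "]

or a two-element array (the token, then the remaining text):

["def"," GHI "]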

Recap: combine steps 1 and 2 in a Select action.

Select:

From: split(outputs('Compose'), '{{')

Map: split(item(), '}}')
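To see what the two splits produce outside Power Automate, here is a minimal Python sketch that mimics them with str.split. It assumes Python's str.split behaves like the flow's split() for this input (it does for these delimiters); it is an illustration, not the flow itself.

```python
# Sketch of steps 1 and 2: split on "{{", then split each piece on "}}".
# Assumption: str.split mirrors Power Automate's split() for this template.
template = "ABC {{def}} GHI {{jkl}} MN"

pieces = template.split("{{")                    # step 1
rows = [piece.split("}}") for piece in pieces]   # step 2 (the Select's Map)

print(pieces)  # ['ABC ', 'def}} GHI ', 'jkl}} MN']
print(rows)    # [['ABC '], ['def', ' GHI '], ['jkl', ' MN']]
```

Each row has one element when its piece contains no token, and two when it starts with one.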

3. Conditional replacement using dictionary lookup

In this expression, item() stands for the array produced by step 2 for the current piece:

if(
  equals(length(item()), 2),
  concat(
    outputs('Dictionary')?[item()?[0]],
    item()?[1]
  ),
  item()?[0]
)
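The same conditional can be sketched in Python, assuming each row is the list produced by step 2. The helper name replace_row is hypothetical, and replacing an unknown token with an empty string is an assumption of this sketch, not something the flow expression above guarantees.

```python
# Sketch of step 3: the if(...) expression applied to each row from step 2.
dictionary = {"def": "fish 🐟", "jkl": "chips 🍟"}

def replace_row(row: list[str], lookup: dict[str, str]) -> str:
    """Hypothetical helper mirroring the flow's if(...) expression."""
    if len(row) == 2:
        # Two parts: row[0] is the token, row[1] is the remaining text.
        # Assumption: an unknown token is replaced with an empty string.
        return lookup.get(row[0], "") + row[1]
    # Any other length (normally one part): return the first element unchanged.
    return row[0]

rows = [["ABC "], ["def", " GHI "], ["jkl", " MN"]]
replaced = [replace_row(row, dictionary) for row in rows]
print(replaced)  # ['ABC ', 'fish 🐟 GHI ', 'chips 🍟 MN']
```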

Hidden insight

The trick is this: if the row has two elements, the first element is a token and the second is the remaining text. If the row has only one element, it contains no token, so just return it.

Applied to the three rows from step 2:

[ item()[0], dictionary[item()[0]] + item()[1], dictionary[item()[0]] + item()[1] ] ==> [ "ABC ", "fish 🐟 GHI ", "chips 🍟 MN" ]

If you want the dictionary lookup to be case-insensitive, wrap item()?[0] in toLower(), i.e. outputs('Dictionary')?[toLower(item()?[0])], and store all the dictionary keys in lowercase.
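A Python sketch of the case-insensitive variant, where str.lower stands in for toLower(). The helper name replace_row_ci is hypothetical; for plain ASCII tokens the two lowercase the same way, but the sketch assumes nothing about how they treat other characters.

```python
# Sketch of the case-insensitive lookup: lowercase the token before the lookup,
# like wrapping item()?[0] in toLower(). Dictionary keys must already be lowercase.
dictionary = {"def": "fish 🐟", "jkl": "chips 🍟"}

def replace_row_ci(row: list[str], lookup: dict[str, str]) -> str:
    """Hypothetical helper: step 3 with a lowercased token."""
    if len(row) == 2:
        # Assumption: unknown tokens become an empty string.
        return lookup.get(row[0].lower(), "") + row[1]
    return row[0]

print(repr(replace_row_ci(["DEF", " GHI "], dictionary)))  # 'fish 🐟 GHI '
print(repr(replace_row_ci(["Jkl", " MN"], dictionary)))    # 'chips 🍟 MN'
```

Lowercasing only the token is not enough on its own: a key stored as "Def" would never match, which is why the keys themselves must be lowercase.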

4. Join it all together

join(body('Select'),'')

Result:

ABC fish 🐟 GHI chips 🍟 MN
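Putting all four steps together, here is an end-to-end Python sketch of the pipeline (Compose, Select, then join). The function name render is hypothetical, and the empty-string fallback for unknown tokens is again an assumption of the sketch.

```python
# End-to-end sketch: split twice, replace tokens, then join with an empty separator.
def render(template: str, lookup: dict[str, str]) -> str:
    """Hypothetical helper mirroring Compose -> Select -> join()."""
    # Steps 1 and 2: split on "{{", then split each piece on "}}".
    rows = [piece.split("}}") for piece in template.split("{{")]
    # Step 3: two parts = token + remaining text; otherwise keep the first part.
    # Assumption: unknown tokens become an empty string.
    parts = [
        lookup.get(row[0], "") + row[1] if len(row) == 2 else row[0]
        for row in rows
    ]
    # Step 4: like join(body('Select'), '').
    return "".join(parts)

dictionary = {"def": "fish 🐟", "jkl": "chips 🍟"}
print(render("ABC {{def}} GHI {{jkl}} MN", dictionary))  # ABC fish 🐟 GHI chips 🍟 MN
```

As with the flow expression, a piece containing a second }} splits into three parts and falls into the else branch, so only its first part survives: render("A {{def}} x }} y", dictionary) gives "A def". Keep literal }} out of the template text.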

No loops.

No apply-to-each.

No regex.

No variables.

Super fast: zero-second actions.

This technique lets you:

Build dynamic email templates

Create server-side HTML rendering logic

Replace merge fields safely

Do token replacement in Dataverse text

Build dynamic document generators

All using standard Power Automate expressions.