Do Group By in Microsoft Flow with two simple elegant loops

/This is a problem I worked on with Fausto and is a common problem I see when working with rows of data in Microsoft Flow.

Plan

- Scenario: Group By

- Doing Group By Elegantly

- Build it in two parts

- Run it quickly

Scenario: Group By

Group-by is a common problem - but usually we see this a lot in the form of batching rows of data to some sort of output. One frequent example is a schedule that looks up a bunch of tasks or messages for the day, and sends only ONE email with a group of tasks to that person.

Doing Group By Elegantly

How do we do Group-By elegantly, and quickly. Yes, group-by can be done with for-each and append-to-array, but that approach leads to fairly complex looking inner loops and wide-layout if-conditions.

The solution that we got at the end is an elegant one with two for-each loops. It is easy to follow and easy to test - which means you can easily reconfigure it to your own scenario.

Let's take an example

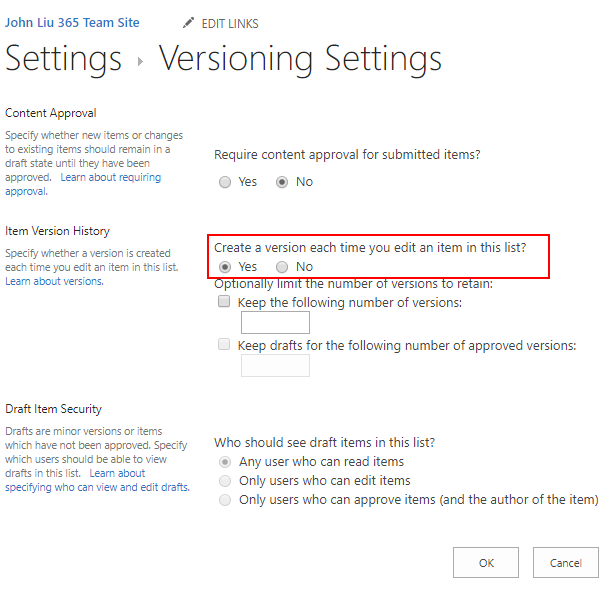

I have a list here with 6 rows from SharePoint and 3 names. In the first loop, we want to reduce the array of 6 rows into unique names:

[ "Gandalf", "Boromir", "John Liu" ]

Then in the second loop, we can loop over each of these unique names and built the corresponding list of rows for each name:

[{

"name": "Gandalf",

"titles": [ "Wizard", "Remember Password"]

},

{

"name": "Boromir",

"items": [ "One Does not Simply", "**SPOILER**"]

},

{

"name": "John Liu",

"items": [ "Making up lists", "Ninja"]

}]First Loop

In each loop, we use the union() expression to combine two arrays. Union has the special ability that if an element already exists in the array it is omitted.

union( ["Gandalf", "Boromir"], ["Gandalf"] ) => ["Gandalf", "Boromir"]

We do the union in a compose action, and then put that result back into the array variable.

In my example, I'm using SharePoint's people fields - so I'm stepping into item()?['Person']?['Email']

That's first loop. Easy to test - one expression.

Second Loop

Second loop involves looping through the first array of unique_names, and the first action stores that value into a compose step.

Then use Filter array to select only the rows with that unique name from the original table.

Then use Select to pick only the columns from the original table that we want to see. (The majority of the second loop looks more busy, because I'm using techniques from my other blog post "using select to simplify your Create HTML Table")

I append the results as an object to a running array of results. But it is also very easy to just send email at this point.

For each unique name (or email), this sends one email, the email body is a summary HTML table of the items in the list for this person.

Run it quickly

Expression operations like Compose, Filter, Select or Union are fast - most of the time running 0-second.

For Each steps and set variables are slower - because there are global locks being applied. By reducing use of variables, we can make the loop go much faster.

Elegant and fast.

Summary

Two elegant loops. No crazy if-conditions and checking if a value already exists in an array during append.

This is a very useful pattern if you are sending daily emails or summary notifications to a user and you want to batch the results.

I wanted to end here on more loops. Loopity loop.