Microsoft Flow: SharePoint Trigger on specific fields changed via SP HTTP Request

/A very common request in Microsoft Flow for SharePoint is a trigger that only runs when a certain field has changed, or when it has changed to a certain value.

With the new SharePoint "Send an HTTP request to SharePoint" action, we can now do this in relatively few steps with the help of List Versioning.

Plan

Enable List Versioning

Obtain item versions results on item change

A conditional fast exit

Understand the data formats and how to debug

Summary

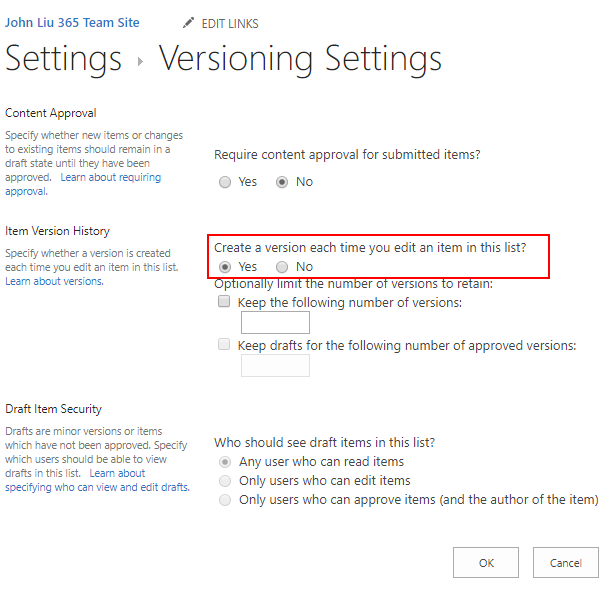

Enable List Versioning

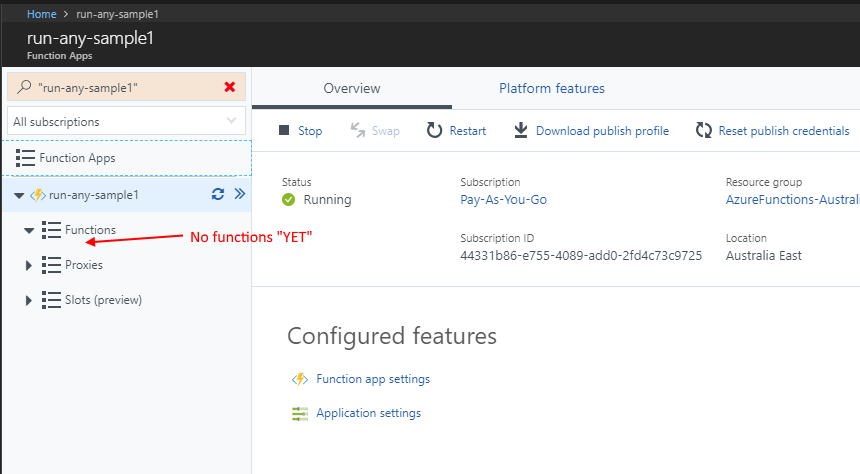

Obtain item versions results on item change

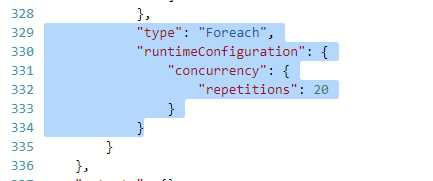

// SPREST-Versions "method": "post", "body": { "method": "GET", "uri": "_api/web/lists/getbytitle('ListWithHistory')/items(triggerBody()?['ID'])/versions?$top=2", "headers": { "accept": "application/json; odata=nometadata" } } // Select { "from": "body('SPREST-Versions')?['value']", "select": { "ID": "item()?['ID']", "VersionLabel": "item()?['VersionLabel']", "Title": "item()?['Title']", "Demo": "item()?['Demo']", "Completed": "item()?['Completed']" } }

Versions returns the latest version first.

But there are a few tweaks we can do here:

If we ONLY ever care about the latest two versions - we use $top=2

this covers the new version and the previous version.If we care about the latest versions within the last few minutes - we can filter with $filter=Modified gt 'last-5-minutes-date-expression'

The expression would be addMinutes(utcNow(), -5) but it should be formed in a separate action above.I have seen really complex mixed up Flow triggers with a lot of overlapping list item updates - I highly advise designing the workflow to not do that. This pattern here can help decouple those situations.

There are many fields on version endpoint - Select will clean it up to only the specific fields that we care about:

A conditional fast exit

// Condition-SameTitle equals(first(body('SelectVersions'))?['Title'], last(body('SelectVersions'))?['Title'])

Results

Results for success and failure.

This check can be performed with ~4 actions, as part of the pre-condition check for whether to continue the Flow.

Understand the data formats and how to debug

Understanding this step is interesting for debugging purposes. Since we are calling REST and getting back JSON, we need to know how to read it and

Run this, and we'll see the default output which is

But for our needs - it can be a lot easier if we apply additional header.

With either method, we end up with one of these expressions to get to the result array of versions:

body('SPREST-Versions')?['d']?['results']

body('SPREST-Versions')?['value']

Summary

Tip 1 - use accept header to get simpler result

Read the output to understand the return JSON format

Tip 2 - using $top=2 is simpler than Tip 3.

Tip 3 (advanced) - using $filter to compare whether just the last two versions or changes as a batch

Tip 4 (advanced) - on the created event - there will be only one version. So

first(body('SelectVersions'))?['Title']

last(body('SelectVersions'))?['Title']

refers to the same row.

We can force comparison by:

body('SelectVersions')?[0]?['Title']

body('SelectVersions')?[1]?['Title']

The second row [1] doesn't exist, but ? will replace the query with null.Use expressions to navigate the JSON structure

Use Select to simplify the result array

Remove complexity in overlapping Flows hitting update on the same list item by making sure Flows exit early when not required - this will simplify a lot of our Flow designs

Tip 5 (advanced) - in the SelectVersion step - do not include the VersionLabel then in the comparison step - compare the two JSON objects string(first(body('SelectVersions'))) and string(last(body('SelectVersions'))) directly. This allows us to check all the fields together.